Raymarching Beginners' Thread

category: code [glöplog]

ahh huh. the gpu burns rays while the cpu is sleeping. yeah. nice topic. I enjoy that a lot.

demo later. I'm a slowdev.

graga: that was for video processing rather than any kind of 3d, and on iPad (so no CUDA/CL, but a fixed CPU/GPU combo makes things easier to plan). Basically I totally changed the way the engine works, so yes, there was a lot of rewriting. Originally, the GPU was doing various effects (blurs, colour transforms, brightness curves and such) in the normal way. I changed that so the CPU pre-computed things like the curves, and the GPU only did the blurs and a couple of lookups to apply the curves.
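A minimal Python sketch of that split, assuming an 8-bit channel and a gamma-style brightness curve (the curve and the names `build_curve_lut`/`apply_lut` are hypothetical, not taken from the engine described above). The curve is evaluated once per possible input level on the CPU, so the per-pixel work reduces to a table lookup, which on the GPU would be a fetch from a small 1D texture.

```python
def build_curve_lut(gamma, size=256):
    """CPU side: evaluate a (hypothetical) gamma brightness curve once per input level."""
    max_v = size - 1
    return [round(max_v * (i / max_v) ** (1.0 / gamma)) for i in range(size)]


def apply_lut(pixels, lut):
    """Per-pixel side: no maths left, just an indexed lookup (a 1D texture fetch on the GPU)."""
    return [lut[p] for p in pixels]


lut = build_curve_lut(2.2)
print(apply_lut([0, 64, 128, 255], lut))
```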

For raymarching, you could do a low-res pre-pass on the CPU. This has been discussed a fair bit already, and I've implemented it (on the GPU) for my ray tracer with an excellent speedup. Maybe ray trace the scene using just bounding boxes, and upload the result to the GPU as a texture. You don't need lighting or anything, just the distance and the object ID. The GPU side then gets a big 'head start': in most cases it can start the ray much deeper into the scene, or even skip marching entirely if you know the ray doesn't hit anything, or only hits a sphere, cube or plane.
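A minimal CPU-side sketch of such a pre-pass in Python, assuming a pinhole camera looking down -z, a horizontal floor plane, and bounding spheres as stand-ins for the bounding boxes mentioned above (all names are hypothetical). Each texel stores only the nearest hit distance and the ID of what was hit; uploading the grid as a two-channel float texture is left out.

```python
import math


def normalize(v):
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def hit_sphere(ro, rd, center, radius):
    """Nearest t >= 0 where the ray ro + t*rd (rd normalized) meets the sphere, else inf."""
    oc = (ro[0] - center[0], ro[1] - center[1], ro[2] - center[2])
    b = dot(oc, rd)
    h = b * b - (dot(oc, oc) - radius * radius)
    if h < 0.0:
        return math.inf
    h = math.sqrt(h)
    t = -b - h
    if t < 0.0:
        t = -b + h  # ray origin is inside the sphere
    return t if t >= 0.0 else math.inf


def hit_floor(ro, rd, floor_y):
    """Intersection with the horizontal plane y = floor_y, else inf."""
    if abs(rd[1]) < 1e-9:
        return math.inf
    t = (floor_y - ro[1]) / rd[1]
    return t if t > 0.0 else math.inf


def prepass(width, height, ro, fov_deg, spheres, floor_y):
    """Low-res grid of (distance, object_id): id -1 = miss, 0 = floor, 1.. = spheres."""
    scale = math.tan(math.radians(fov_deg) * 0.5)
    aspect = width / height
    grid = []
    for j in range(height):
        row = []
        for i in range(width):
            # Must match the shader's camera exactly (assumed: pinhole looking down -z).
            x = (2.0 * (i + 0.5) / width - 1.0) * aspect * scale
            y = (1.0 - 2.0 * (j + 0.5) / height) * scale
            rd = normalize((x, y, -1.0))
            best_t, best_id = hit_floor(ro, rd, floor_y), 0
            for k, (center, radius) in enumerate(spheres, start=1):
                t = hit_sphere(ro, rd, center, radius)
                if t < best_t:
                    best_t, best_id = t, k
            if best_t == math.inf:
                best_id = -1
            row.append((best_t, best_id))
        grid.append(row)
    return grid


grid = prepass(8, 8, (0.0, 0.0, 0.0), 60.0, [((0.0, 0.0, -5.0), 1.0)], -2.0)
print(grid[3][3], grid[7][0], grid[0][0])
```

In a real setup the spheres would be the bounding volumes of the raymarched objects, so the stored distance is where marching can begin rather than the final hit.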

As I said, this has been covered already, but if you move it to the CPU you're freeing up GPU time for other things (post AA? more fx/objects/iterations/lighting etc.?)

Toxie: yeah, that could get painful. Where I've done this was on a fixed platform where it's nice and easy to really maximise it. On PC I guess you'd have to assume the worst case (say a moderate Core 2, with maybe 1 core free after the main demo code + music player?). Either that or spend a bunch of time making it really flexible, which doesn't sound like a lot of fun ;)

If you take the example I gave above, that should be fine for a single core. If there's a bunch of extra CPU power available you could increase the resolution of the pre-pass texture. That should scale quite nicely, and it'd help a lot to have more resolution around object edges (which are the most expensive in the raymarcher).

Of course all this is only worthwhile if you're looking for that little bit more speed :)

That's a really nice idea! Right now all my CPU does is draw a quad now and then, so I'm sure there's a lot I could offload from the GPU. Any ideas on how to get started with the CPU pre-tracing?

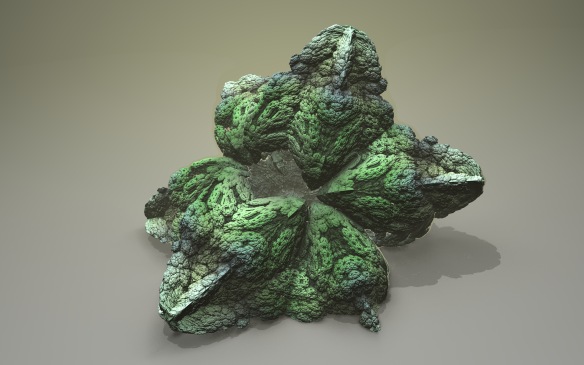

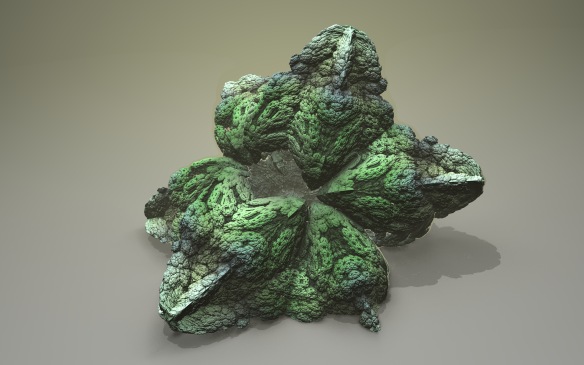

Some really nice distance field fractal stuff here! It's focused more on non-realtime stuff though.

http://blog.hvidtfeldts.net/

mewler: guess it'll depend entirely on what your main renderer is doing. You have to consider what it does, what parts are slowest, and what data you could feed it to speed that up.

For bounding boxes, all you do is render to a texture on the CPU, set the camera up exactly the same as you do in the shader, then just do basic cube/sphere/plane intersection tests to find out which object the ray hits and what the distance is. Then pass that to your raymarcher, and you can start the ray at that distance. If you know it's safe (say for a plane or sphere) you don't need to march at all, because the interpolated distance from your texture is close enough.
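A minimal sketch of that head start in Python, assuming an exact sphere SDF and a bounding sphere in place of a bounding box (names and values are hypothetical). Because the object lies entirely inside its bound, the distance to the bound never exceeds the distance to the real surface, so starting the march there cannot skip past it. A value interpolated from a low-res texture does not carry that guarantee, so in practice you may want to back off by a small margin.

```python
import math

# Hypothetical scene: one sphere, plus a larger bounding sphere around it
# (standing in for the bounding box the CPU pre-pass would intersect).
CENTER = (0.0, 0.0, -5.0)
RADIUS = 1.0
BOUND_RADIUS = 1.5


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def scene_sdf(p):
    v = sub(p, CENTER)
    return math.sqrt(dot(v, v)) - RADIUS


def bound_hit(ro, rd):
    """Entry distance into the bounding sphere, or None if the ray misses it."""
    oc = sub(ro, CENTER)
    b = dot(oc, rd)
    h = b * b - (dot(oc, oc) - BOUND_RADIUS * BOUND_RADIUS)
    if h < 0.0:
        return None  # misses the bound, so it can't hit the object: skip marching
    return max(0.0, -b - math.sqrt(h))


def march(ro, rd, t_start=0.0, max_steps=256, eps=1e-4, t_max=100.0):
    """Sphere tracing from t_start; returns (hit distance or None, steps used)."""
    t = t_start
    for step in range(1, max_steps + 1):
        p = (ro[0] + rd[0] * t, ro[1] + rd[1] * t, ro[2] + rd[2] * t)
        d = scene_sdf(p)
        if d < eps:
            return t, step
        t += d
        if t > t_max:
            return None, step
    return None, max_steps


ro = (0.0, 0.0, 0.0)
n = math.hypot(0.18, 1.0)
rd = (0.18 / n, 0.0, -1.0 / n)                    # off-axis ray that grazes the sphere
cold = march(ro, rd)                              # start at the camera
warm = march(ro, rd, t_start=bound_hit(ro, rd))  # start at the pre-pass distance
print(cold, warm)
```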

Another example: that fractal you just posted. You could render it at very low res/quality on the CPU, then use that texture to effectively mask out the plane in the background (which then takes near-zero render time, because you already have the distance and the normal is always (0,1,0)). You can also accelerate the shadow if you render that on the CPU too, because you can mask out the parts where you know there's no shadow.

What I've done with my raytracer is to render first (still on the GPU) at 1/8 resolution (which seems to be optimal for me). All I render is distance and object ID. Then in the main pass, I check whether the object ID is an integer value. It always is for objects, so if it isn't, the current pixel is on an edge between two objects (i.e. the texture filtering is doing its job) and I do a full raytrace. Everywhere else I don't raytrace at all, because the interpolated distance is accurate enough (I'm not using very complex objects), so I just get the normal and do light + shadow. Probably less than half of the pixels get raytraced; the rest get interpolated :)
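A Python sketch of that edge test, assuming the IDs are stored as whole-number floats in a low-res texture that is then bilinearly filtered (the helper names are hypothetical). One caveat: a 50/50 blend of IDs 1 and 3 comes out as exactly 2, so the test is a heuristic and can occasionally miss an edge.

```python
import math


def bilinear(tex, u, v):
    """Bilinearly sample a 2D list `tex` at continuous texel coords (u, v), clamped to the edges."""
    h, w = len(tex), len(tex[0])
    u = min(max(u, 0.0), w - 1.0)
    v = min(max(v, 0.0), h - 1.0)
    x0, y0 = int(math.floor(u)), int(math.floor(v))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = u - x0, v - y0
    top = tex[y0][x0] * (1 - fx) + tex[y0][x1] * fx
    bottom = tex[y1][x0] * (1 - fx) + tex[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


def needs_full_trace(id_tex, u, v, eps=1e-3):
    """A fractional filtered ID means the sample straddles two objects: raytrace this pixel."""
    obj_id = bilinear(id_tex, u, v)
    return abs(obj_id - round(obj_id)) > eps


# Hypothetical 4x2 ID texture: object 1 on the left, object 2 on the right.
id_tex = [[1.0, 1.0, 2.0, 2.0],
          [1.0, 1.0, 2.0, 2.0]]
print(needs_full_trace(id_tex, 0.5, 0.5), needs_full_trace(id_tex, 1.5, 0.5))
```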

Just to mention it here - Corollary 4.1 from the zeno paper is pretty useful for convex objects! ;)

To me the "raytrace bounding volume on GPU and store distance and ID to texture" sounds like a good idea. Gets rid of a lot of field function calls.

Only if you have very simple (boring!) objects - otherwise you'll miss features that way.

That's the nice thing about raymarching the objects in the rough grid to determine a *list* of active objects for the tile: with the distance function you directly know whether any pixel in the tile has a chance of hitting the object. That's just a question of comparing the distance with the (current) width of the tile frustum.
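A sketch of such a per-tile test in Python, assuming exact (or at least conservative) distance functions and a cone around the tile's centre ray whose half-angle tangent `k` is large enough to enclose the tile's corner rays. The names are hypothetical, and this is one way to do the "distance vs. tile frustum width" comparison, not necessarily the method used above.

```python
import math


def object_may_hit_tile(sdf, ro, axis, k, t_max=50.0, max_steps=256):
    """Conservative cone test for one object against one screen tile.

    The cone has apex ro, unit direction `axis` (the tile's centre ray) and radius
    r(t) = k * t, where k is assumed to be at least tan(angle to the tile's corner rays).
    Returns False only if the cone up to t_max is provably free of the object.
    """
    t = 0.0
    for _ in range(max_steps):
        p = (ro[0] + axis[0] * t, ro[1] + axis[1] * t, ro[2] + axis[2] * t)
        d = sdf(p)   # distance from the axis point to the object
        r = k * t    # cone radius at this depth
        if d <= r:
            return True  # the cone may touch the object: keep it in the tile's list
        # Every cone point at depth t + s is within s + k*(t + s) of p, so the
        # cone stays inside the empty ball of radius d while s <= (d - r) / (1 + k).
        t += (d - r) / (1.0 + k)
        if t > t_max:
            return False
    return True  # undecided after max_steps: stay conservative


def sphere_sdf(center, radius):
    return lambda p: math.dist(p, center) - radius


origin = (0.0, 0.0, 0.0)
axis = (0.0, 0.0, -1.0)   # tile centre ray
k = 0.05                  # hypothetical tile half-angle tangent
print(object_may_hit_tile(sphere_sdf((0.0, 0.0, -10.0), 1.0), origin, axis, k),
      object_may_hit_tile(sphere_sdf((5.0, 0.0, -10.0), 1.0), origin, axis, k))
```

Objects that return False can be dropped from that tile's list, so the full-resolution pass only evaluates the distance functions that can matter there.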

And speeding up the already pretty fast parts of the scene where nothing is happening isn't all that important either imo, depending on the scene of course.

uncovering uses marching cubes on gpu (basically the same as our fluid routines in numb res, although we added some really cool new things to make it faster and better) for the first hit. then it uses some raymarching to refine the result and calculate an accurate normal.

basically the reason for everything is performance. as you might have discovered, raymarching is fine for simple stuff, but if you make something complex the shader size explodes and breaks the compiler or the shader performance, whichever comes first. :) 4ks probably only get away with it because of the simplicity of their scenes. so that was the major problem we had to tackle here: the technique simply does not scale up directly. there's a reason 4k procedural gfx scenes do not run realtime and take forever to precalc.. :)

our technique also has a lot of benefits, e.g. we have the geometry to do fx with and render flat etc.

it also uses raymarching for ao. distance field ao, the fake one, looks like ass. admit it. :) it's like ssao for distance fields. so we used a monte carlo variant, which is much cooler if you work out the tricks to get performance.
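A rough Python sketch of Monte Carlo AO on a distance field, assuming uniform hemisphere sampling (cosine weighting would be the usual refinement) and a short maximum ray length. The function names, sample counts and offsets are hypothetical and say nothing about the performance tricks used in uncovering. Each sample is a short sphere-traced ray, and the result is the fraction of rays that escape.

```python
import math
import random


def normalize(v):
    length = math.sqrt(v[0] * v[0] + v[1] * v[1] + v[2] * v[2])
    return (v[0] / length, v[1] / length, v[2] / length)


def ray_blocked(sdf, ro, rd, max_dist, max_steps=128, eps=1e-3):
    """Short sphere-traced occlusion ray: True if it hits geometry within max_dist."""
    t = 0.0
    for _ in range(max_steps):
        p = (ro[0] + rd[0] * t, ro[1] + rd[1] * t, ro[2] + rd[2] * t)
        d = sdf(p)
        if d < eps:
            return True
        t += d
        if t > max_dist:
            return False
    return False  # out of steps: count as unoccluded


def monte_carlo_ao(sdf, p, n, samples=256, max_dist=2.0, offset=0.01, seed=1):
    """Fraction of random hemisphere rays around normal n that escape (1.0 = fully open)."""
    rng = random.Random(seed)
    ro = (p[0] + n[0] * offset, p[1] + n[1] * offset, p[2] + n[2] * offset)
    escaped = 0
    for _ in range(samples):
        # Normalized Gaussian vector = uniform direction; flip it into n's hemisphere.
        d = normalize((rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)))
        if d[0] * n[0] + d[1] * n[1] + d[2] * n[2] < 0.0:
            d = (-d[0], -d[1], -d[2])
        if not ray_blocked(sdf, ro, d, max_dist):
            escaped += 1
    return escaped / samples


def open_floor(p):
    return p[1]


def low_ceiling(p):
    return min(p[1], 0.5 - p[1])  # floor at y=0, ceiling at y=0.5


print(monte_carlo_ao(open_floor, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
      monte_carlo_ao(low_ceiling, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
```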

we use shadow mapping for shadows. reflections, we had it but it took too many iterations so it exploded the compiler. make some real scenes with raymarching and you'll see how it breaks down, trust me.. :)

Still a bit slow. :)

Next step: Something completely different.

Greetings to Decipher :D

Smash: build a real scene that doesn't fit in a nice cubic volume and see what happens ;) Fully agree with your point though. Raymarching is great for abstract stuff, not so great for the rest. Abstract is fine in the scene though, and kind of necessary for 4k. One day we'll figure this shit out and have a method that handles complex geometry, big scenes, and decent lighting. That will be a sad day, because the fun is in the journey :)

What do you mean by distance field AO? The regular 'step along the normal accumulating the distance value' type of AO? That looks good in some cases, but like ass in others (a cube on a flat plane looks all kinds of wrong). There are some good improvements to it though, like stepping towards the light source and only adding distances while the distance is getting smaller (which prevents self-shadowing and such crappiness, and gives a passable soft shadow instead of regular AO).
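For reference, a Python sketch of that classic 'step along the normal' AO, assuming five samples spaced 0.1 apart and a strength factor of 2 (all hypothetical tuning values). In empty space the field at height h above the surface is exactly h, so any shortfall means nearby geometry; closer samples are weighted more heavily. It only ever looks along the normal.

```python
def normal_step_ao(sdf, p, n, steps=5, step_size=0.1, strength=2.0):
    """Classic 'step along the normal' distance-field AO (1.0 = unoccluded).

    In open space sdf(p + n*h) == h; any shortfall (h - sdf) means nearby geometry.
    Sample i is weighted by 1/2**i, so samples close to the surface count the most.
    """
    occlusion = 0.0
    for i in range(1, steps + 1):
        h = i * step_size
        q = (p[0] + n[0] * h, p[1] + n[1] * h, p[2] + n[2] * h)
        occlusion += (h - sdf(q)) / 2.0 ** i
    return min(max(1.0 - strength * occlusion, 0.0), 1.0)


def flat_floor(p):
    return p[1]


def floor_under_shelf(p):
    return min(p[1], 0.3 - p[1])  # floor at y=0 with a ceiling at y=0.3


print(normal_step_ao(flat_floor, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)),
      normal_step_ao(floor_under_shelf, (0.0, 0.0, 0.0), (0.0, 1.0, 0.0)))
```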

Will you be doing another blog post covering your lighting? I'd be very interested to hear how the marching cubes works out too. Maybe it will have some bearing on what I've been working on lately: a kind of hybrid raytrace + raymarch. I've figured out how to do very large, very detailed scenes with tons of custom geometry at decent speed.. which sounds awesome, except that it's extremely limited in what kind of geometry it handles. Still beats cubes + sin distortion and Perlin noise though :)

las: what's with the colour distortion? Is it something to do with the checkerboard texture, or are you doing some kind of chromatic aberration effect in there? Chromatic effects could be a whole world of fun, but whenever I've considered it I've come to the conclusion that it's a whole world of pain too (minimum 3 rays per pixel to do it right, probably many more if you want any kind of rainbow effect..)
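A small Python sketch of why chromatic effects cost at least three rays: with a GLSL-style `refract` and a slightly different index of refraction per colour channel, one incoming ray splits into three diverging directions, each of which needs its own march. The per-channel IOR values are hypothetical, roughly glass-like numbers.

```python
import math


def normalize(v):
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def refract(i, n, eta):
    """Same formula as GLSL refract(): i, n unit vectors, n facing the incoming ray,
    eta = n1 / n2. Returns None on total internal reflection."""
    cos_i = -(i[0] * n[0] + i[1] * n[1] + i[2] * n[2])
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None
    a = eta * cos_i - math.sqrt(k)
    return (eta * i[0] + a * n[0], eta * i[1] + a * n[1], eta * i[2] + a * n[2])


IOR_RGB = (1.51, 1.52, 1.53)  # hypothetical per-channel indices (red, green, blue)


def dispersed_rays(i, n):
    """One refracted direction per colour channel, entering from air: three marches per pixel."""
    return [refract(i, n, 1.0 / ior) for ior in IOR_RGB]


incoming = normalize((1.0, 0.0, -1.0))
surface_normal = (0.0, 0.0, 1.0)
for channel, d in zip("RGB", dispersed_rays(incoming, surface_normal)):
    print(channel, d)
```

A rainbow-like spread would need more than three wavelengths, which multiplies the march count accordingly.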

Quote:

Raymarching is great for abstract stuff, not so great for the rest.

I completely disagree. The rest can be raymarched as long as one can get that rest into a distance field representation.

Quote:

Abstract is fine in the scene though, and kind of necessary for 4k.

Now, now, that's a really bad excuse! :D

Quote:

One day we'll figure this shit out and have a method that handles complex geometry, big scenes, and decent lighting.

Uh, who said we didn't already? I mean using SDFs to shade rasterised geometry is not something to come. It is already in use. Even within the demoscene. :)

3 rays per pixel is not really a problem ;)

Try a scene that can't be done with regular objects, noise, and boolean operators in a raymarcher. A scene with a lot of different, difficult objects.. then refer to smash's comment about the shader compiler exploding ;) You could do it as a 3d texture or something, but then you're heavily limiting resolution of the geometry. It's great for a lot of stuff, but it does only go so far.

I dunno about abstract stuff being an excuse.. abstract can be just as hard as realistic, depending on what you do. Realistic can be boring. Look at that Fairlight 64k, it's a mix... the scenes have quite realistic parts, but are overall quite abstract; the textures and lighting are quite realistic, the effects are abstract... And anyway, what's wrong with a whole demo based on cubes? :D

I don't get your last sentence though.. SDFs = spherical distance fields? Who's using them with rasterised geometry? What demo? And how? (Surely using a distance field to shade the geometry only makes sense if you can march through complex geometry at speed, which as far as I'm aware we can't, or if you use a 3d texture, which is size + resolution limited again..)

SDF = signed distance field.
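To make the 'signed' part concrete, a Python sketch of two standard exact SDFs: the value is negative inside the surface, zero on it and positive outside, and its magnitude is the distance to the nearest surface point. That is what lets a raymarcher step by the returned value without passing through anything. Sphere and box are chosen only as examples.

```python
import math


def sd_sphere(p, r):
    """Signed distance to a sphere of radius r centred at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - r


def sd_box(p, b):
    """Signed distance to an axis-aligned box centred at the origin with half-extents b."""
    q = [abs(p[i]) - b[i] for i in range(3)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))  # > 0 only outside the box
    inside = min(max(q), 0.0)                              # < 0 only inside the box
    return outside + inside


print(sd_sphere((0.0, 0.0, 0.0), 1.0), sd_sphere((2.0, 0.0, 0.0), 1.0))
print(sd_box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0)), sd_box((2.0, 2.0, 0.0), (1.0, 1.0, 1.0)))
```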

Quote:

Raymarching is great for abstract stuff, not so great for the rest

i strongly disagree. stop twisting mod()-ed cubes already and go for the real thing. lazy bastards :( (i say it with lots of love, and a bit of frustration too)

I agree IQ! But as Smash said, more complex raymarched scenes are much harder to do. Still, as Smash demonstrated, they are totally possible and look awesome. :) Right now I think the problem is a lack of information on how to do more complex stuff. If you check the raymarching toolbox thread (http://www.pouet.net/topic.php?which=7931&page=1&x=26&y=7), you'll see there isn't any code for working out texcoords and the other things needed for less abstract scenes. Lots of other useful stuff there though, thanks to Las :)

aren't texcoords usually computed with a lil position calc mess and some weird projection stuff? o.O
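One common answer, sketched in Python under the assumption that the hit position and normal are already known: project the position onto the plane the normal faces most ('box' mapping), or sample all three projections and blend them by the normal (triplanar mapping). The names and the blend sharpness are hypothetical; sphere or cylinder projections work the same way.

```python
def box_uv(p, n, scale=1.0):
    """Project the hit position onto the axis plane the normal faces most."""
    ax, ay, az = abs(n[0]), abs(n[1]), abs(n[2])
    if ax >= ay and ax >= az:
        return (p[1] * scale, p[2] * scale)  # X-facing surface: use (y, z)
    if ay >= az:
        return (p[0] * scale, p[2] * scale)  # Y-facing surface: use (x, z)
    return (p[0] * scale, p[1] * scale)      # Z-facing surface: use (x, y)


def triplanar_weights(n, sharpness=4.0):
    """Blend weights for sampling all three planar projections (triplanar mapping)."""
    w = [abs(c) ** sharpness for c in n]
    total = sum(w)
    return [c / total for c in w]


print(box_uv((1.0, 2.0, 3.0), (0.0, 1.0, 0.0)), triplanar_weights((0.0, 0.6, 0.8)))
```

Triplanar blending avoids the hard seams box mapping produces where the dominant axis switches, at the cost of three texture lookups per pixel.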

oh boy… all I can tell is you're all digging the wrong hole! :D there you go, here's a pointer.

I guess marching cubes is not the way to go ;)

Now stuff needs a data structure - able to store and access a real-time generated sparse distance field or something like that.

decipher: unless I'm missing something, you're still limited heavily by the maximum 3d texture size.. which leaves you with scenes that must fit inside a cube and limited detail.

@Psycho: I have read what you wrote about the technique, but didn't quite get it.

You render at, say 1/8? resolution and then store (how? what? where?) the object you've encountered in that tile. In full resolution render get that info (Texture? VBO?) and use a specially crafted / branched field function? How do you handle shadows/reflection/refractions then?

psonice: You're limited by how much detail you want to represent the object with, i.e. by how complicated your distance function can get.

The bad thing is that imo marching cubes looks like marching cubes. Always, everywhere. It fits the 'abstract' look uncovering static has quite well though.

psonice, you can dynamically create a low-res approximation of your scene in a 3D texture as a distance field. I believe that is how some of the lighting in LBP (LittleBigPlanet) is done.
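A minimal Python sketch of that idea, assuming a cube-shaped region baked at a fixed resolution and trilinear lookups like a hardware 3D texture would do (names and sizes are hypothetical; this is not a description of how LBP or any particular demo does it). It also illustrates psonice's concern: memory grows with n*n*n and everything outside the cube is clamped, so the baked field is only a low-res, bounded approximation.

```python
import math


def bake_grid(sdf, n, lo, hi):
    """Sample sdf on an n x n x n grid spanning [lo, hi] on each axis, stored as grid[z][y][x]."""
    step = (hi - lo) / (n - 1)
    grid = [[[sdf((lo + x * step, lo + y * step, lo + z * step))
              for x in range(n)]
             for y in range(n)]
            for z in range(n)]
    return grid, step


def sample_grid(grid, lo, step, p):
    """Trilinear lookup, clamped to the grid (like a 3D texture with clamp-to-edge)."""
    n = len(grid)

    def cell(c):
        f = min(max((c - lo) / step, 0.0), n - 1.0)
        i = min(int(f), n - 2)
        return i, f - i

    (x, fx), (y, fy), (z, fz) = cell(p[0]), cell(p[1]), cell(p[2])

    def lerp(a, b, t):
        return a + (b - a) * t

    def row(zz, yy):
        return lerp(grid[zz][yy][x], grid[zz][yy][x + 1], fx)

    front = lerp(row(z, y), row(z, y + 1), fy)
    back = lerp(row(z + 1, y), row(z + 1, y + 1), fy)
    return lerp(front, back, fz)


def sphere(p):
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - 0.5


grid, step = bake_grid(sphere, 17, -1.0, 1.0)
print(sample_grid(grid, -1.0, step, (0.3, 0.1, -0.2)), sphere((0.3, 0.1, -0.2)))
```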